Psychology: Neither doctor nor Freud

We both hold PhDs in psychology, one obtained in England in 1991 and one obtained in Australia in 2019. One of us has worked around the globe in various top research positions, the other has never worked outside Melbourne. Yet we have each heard remarkably similar misconceptions about our discipline – not merely from the public but also from university colleagues.

Sometimes we’re mistaken for psychotherapists (“Can you read my mind?”), even though neither of us has ever seen a client in our lives. And while professional psychologists certainly deliver some forms of evidence-based psychotherapy, there are many other forms (such as those in the psychoanalytic tradition) that professional psychologists do not administer.

Other times, we’ve been confused with psychiatrists (“Can you prescribe opioids?”), even though we don’t administer medication, treat patients or hold medical degrees. We do work in mental health and occasionally publish in psychiatrically focused journals, but psychiatry and psychology are distinct professions.

Admittedly, we’re not sure how widespread these misconceptions are, as we know of no relevant data. In any case, these are not the misconceptions that irk us the most. Far more infuriating is the belief among some academics that psychology is a “soft” science – the poor, “wishy-washy” cousin of biology, chemistry and physics.

In fact, psychology has a rich experimental history dating back to the 1830s, when visionary German experimentalists such as Gustav Fechner, Hermann von Helmholtz and Wilhelm Wundt set up the first psychology laboratories and diagnostic systems. Since then, psychology has made a number of breakthroughs and discoveries, even if not at the rate of other sciences. Examples include advances in our understanding of human language, attention, memory, learning, emotion, decision-making, visual processing, sleep, intergroup processes, morality and mental illness and its psychosocial treatments (such as cognitive behavioural therapy). We are a long way from understanding any of these complex, dynamic processes completely, but progress has undeniably been made thanks to psychologists’ steadfast devotion to the scientific method.

Today, psychology graduates will struggle to secure positions in either research or the community without strong technical, statistical and mathematical modelling skills. Far from the discipline being too “soft”, one of the more common complaints, especially among undergraduates, is that psychology courses are becoming too difficult, too technical and too “sciency”.

What about today’s psychology research community? Yes, we’ve had scandals around data fabrication and replication failures, but we’ve emerged stronger for it. More of us than ever pre-register our work, pool multimodal data to enable adequate statistical power and seek robust replication of findings before making sweeping generalisations about human nature. The vast majority of research psychologists in 2021 take very seriously their responsibility to honestly and rigorously understand mind and behaviour using all available tools.

There are probably many reasons why the “soft science” misconception prevails in some academic quarters. Apart from simple ignorance, another reason is that, unlike other sciences, psychology doesn’t have units of measurement that can be read off observationally. It is also institutionally homeless, being located across the full gamut of faculties, from science and medicine to social science or even humanities.

Regardless of its source, this misconception is potentially harmful. Even in a supposedly “post-facts” era, academics still have public influence. If they misinform the public about what psychology is, people may avoid seeking its help. If confused with psychoanalysis, psychology might suffer the same negative stereotypes (such as that it involves lying on a couch and recounting one’s earliest sexual desires). If psychology is confused with psychiatry, the public might be disappointed to find that an appointment with a psychologist means neither seeing a medical doctor nor receiving medication.

Moreover, if academics dismiss psychology’s scientific worth, its standing and funding will fall. This will negatively impact our ability to address pressing societal problems such as climate change, geopolitical instability and global mental health, all of which depend on obtaining a better understanding of, and ultimately changing, human decision-making and behaviour. Indeed, there’s a reason Nietzsche called psychology the “queen of the sciences”.

So, colleagues, talk to those in the School of Psychology, wherever it is housed on your campus. You will find people trained in the importance of experiment and evidence, carefully working away on problems that touch us all, one way or another.

Kim Cornish is Sir John Monash distinguished professor of psychology at the Turner Institute for Brain and Mental Health, Monash University, where Andrew Dawson is a postdoctoral researcher.

Mathematics: Playing the field

When people think of mathematicians, they usually conjure one of three images.

One is the human calculator, quickly working out any desired tip at a restaurant to fractions of a cent. Another is the pi worshipper, standing alone before a dusty chalk altar in monomaniacal pursuit of that mystical number’s 9 quadrillionth digit. Or maybe we are data crunchers, staring into computer monitors as we navigate the ever-expanding universe of binary code churned out by the modern world.

While our friends from academia tend to disregard the first caricature, they remain prone to the other two. What all three have in common is the conception of mathematics as intellectual weightlifting, drawing on a combination of raw brain power and time-honoured methods to get to a predictable answer. This image can be fun to indulge, especially for young people who enjoy seeming superhuman. Yet it is definitely a fantasy. Mathematical research, in reality, is as unpredictable as that in any other STEM field.

Those distorted perceptions often have their origins in the calculus classes we put on as a service to other academic departments. Unfortunately, many such courses focus on the product, not the process, of mathematical thinking – because by the time a mathematical idea becomes useful to another discipline, all of the uncertainty involved in its creation is gone.

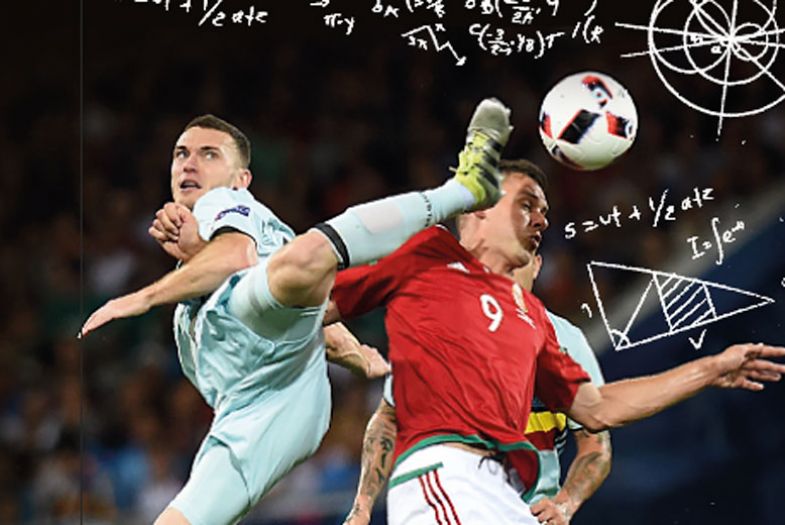

Mathematicians resemble not so much intellectual weightlifters as soccer players. The rules and the object of the game are clear, but there is no predetermined, step-by-step process by which goals can be scored. The successful player must creatively adapt to unexpected challenges. And even all-time greats miss far more often than they score; Fermat’s Last Theorem was posed around 1637 but remained unsolved, despite sustained efforts by many great mathematicians, until 1995 (imagine the grant committee reading that proposed timetable!).

The other important point about soccer, of course, is that one player does not make a team. Yet even administrators often fail to grasp that modern mathematics is a team game too, looking askance at our applications for travel or start-up funds because they assume we have no need to establish collaborations.

One game we play is called “What if?”. We take a problem that we have already solved and ask what would happen if we relaxed a certain assumption or applied our solution to a different context. A variant is “The Polya Process”, based on George Polya’s advice in his famous 1945 book How to Solve It: “If there is a problem you don’t know how to solve, there is a simpler related problem that you do know how to solve. Find it.” We take a problem that is too hard to solve in its entirety and ask: “Can I get around this obstacle instead of going through it?”, or “Is there an assumption I could add that would make this more doable?”

Another game is called “Counterexamples”. We propose a statement (such as “all infinite sets have full measure”) while our friendly adversary proposes examples that would make the statement false (“What about the integers?”). So we alter our statement accordingly (“All uncountable sets have full measure”) and our opponent continues to pick holes (“What about the Cantor set?”). The process repeatedly refines the statement until all possible objections are answered – or the question is fundamentally changed to become tractable. It requires extreme care, rigour and, most importantly, playfulness.

Some mathematical games offer the promise of helping people in real life (such as modelling disease dynamics), while others are pursued just for intellectual challenge. In reality, though, the line between these is usually not so clear. There are countless historical examples where a mathematical idea that first emerged out of pure intellectual curiosity went on to have powerful real-world consequences decades later; examples include Riemannian geometry laying the foundation for general relativity and number theory’s impact on cryptography.

Games are frustrating, exciting and beautiful. They balance curiosity and logic, expanding our minds to see new insights. And they can inspire intense investment, even when they are played with no clear objective. But they aren’t always much use when you’re trying to work out how much to tip your waitress.

Josh Hiller is assistant professor in mathematics and computer science at Adelphi University, New York. Andrew Penland is assistant professor in mathematics and computer science at Western Carolina University.

Media studies: Keeping up with the Kardashians

As a media studies scholar, I have had to make clear to puzzled fellow academics that I don’t analyse the news, measure media effects or write television reviews. And I’ve watched eyebrows furrow as I explain that my opinions on the quality of various films and television programmes are not relevant to my scholarship.

Media studies is a subject area rather than a discipline, encompassing a wide range of topics and a variety of methodologies. The thesis of my MA in communications was on the advertising industry of the 1950s and ’60s, and my PhD in radio-television-film focused on the role of the advertising industry in early broadcasting. I took courses in many departments, including art history, sociology, American studies and history. My training encompassed social scientific, literary and historiographical theories and methodologies, and I have published in journals specialising in cinema, radio, history and American studies. My colleagues can be found in a bewildering number of programmes, including English, speech, film, communication, theatre, journalism, advertising, rhetoric and a variety of social science departments.

But questioning the relevance of media studies to the academic project is still not unusual. Some academics assume that news media is the only form of media worth studying because of the importance of accurate information to the functioning of a democratic society. Media designed for entertainment is suspect, perhaps because it is fictional, and media designed for selling is likewise suspect because it exists for commercial purposes.

This suspicion is embodied in the vast amount of social scientific research on the effects of mass media (and, now, social media), particularly on young people – who, for centuries, have been regarded as unduly susceptible to new media forms. But even social scientific media scholars are often baffled by humanities-based media studies: if not to count units or measure outcomes, thereby quantifying media’s impact and relevance to our society, what is the point?

The earliest humanities work in media was done by scholars trained in literary analysis, who worked to elevate films and television programmes into authored texts worthy of scholarly analysis. For example, by renaming 1940s detective films based on pulp novels as “film noir”, they imparted value to commercial cultural forms previously dismissed by elites. (Notably, much of such cultural legitimation tends to focus on media texts from several decades past.) But some academics continue to question humanities-based approaches as mere film reviewing, fandom or glorified journalism.

There are differences between journalism about media and media studies scholarship. Journalistic film or television criticism cannot include information that could “spoil” the experience for audiences. News coverage of media products serves a promotional purpose and much of it is designed to serve industry interests (trade publications rely on advertising from the industry they cover). Media studies scholars, in contrast, analyse the media text as a whole. And many study how media texts are produced, delving into primary sources and drawing upon historical context for analysis, rather than parroting the views of industry insiders. Others study reception and fan culture, employing a variety of methodologies for analysing audiences’ interpretative processes.

Academia can be a social hierarchy that reflects the values of its elites. My claim that the Kardashian/Jenner family is worthy of study from a variety of disciplinary and methodological perspectives, for instance, has elicited horror among colleagues who find reality television trite and the Kardashians crass. But, as a media studies scholar, I find value not in any personal enjoyment of the Kardashian/Jenner oeuvre, or any claim about its absolute aesthetic quality. I find it, rather, in the opportunity it gives us to understand the culture of its often-overlooked young and female audience. For this demographic, the Kardashians/Jenners have produced significant, long-running cultural texts. They have also marketed a wide range of products, built media organisations and innovated cultural forms – all of which may repay visual, rhetorical, social, cultural and industrial analysis.

Indeed, I would argue that analysing such commercial media forms, practices and institutions is crucial to understanding our world. Therefore, it is central to the academic project.

Cynthia B. Meyers is professor of communication at the College of Mount Saint Vincent.

Creative writing: A damaging narrative

Before Covid, I took my creative writing class to the zoo to practise writing about animals. When a colleague of mine – a lecturer in economics – found out about the trip, she emailed to say she was envious.

“I wish I could do that in my class!” she said. “You know, muck around every day, go on excursions. You don’t know how lucky you are! I’m teaching deadweight loss and Harberger’s triangle, and you’re writing about wombats!”

Her flattery was punctuated with a suspicious number of exclamation marks. Thanks to my training in textual analysis, I could read between the lines. Creative writing is fun, easy, light. Economics is serious, difficult, important.

I carefully crafted my reply: “Creative writing is an academic discipline that requires the same skills as economics: critical thinking, working with constraints, testing different theories, understanding complex relationships, navigating market madness. If your students are bored, they can take CWR101 as an elective.”

A crash course in clear communication could only make for better economists, I thought.

My colleague responded combatively with unrestrained sarcasm and another barrage of exclamation marks: “Sounds great! Thanks! I would love to audit your course!”

I attributed her attitude to a bad day, but still, her email bothered me. I recognised in her bizarre attempt at self-validation a complete misunderstanding of creative writing and what a university writing course entails.

For one, the misguided idea that anything “creative” is a soft option is an unsupported resentment that still lingers in the academy; its negative charge often rubs off on students who come to class with the misconception that a creative writing course will lower their stress and raise their grade point average.

“What this course taught me,” one of my students reflected, “is how difficult it is to construct a good sentence, and how writing is both a discipline and an art.”

“What I know about writing,” another confessed, “is that everything I thought I knew about writing is wrong.”

For most students, the moment of epiphany comes with direct experience and the delayed benefit of hindsight. For most academics, even those with good intentions, the flash of insight never strikes at all.

This has consequences for the institutional perception not only of our teaching but of our research too. Members of creative writing departments face an ongoing and utterly unjust struggle to have creative work recognised as valid research. As University of South Australia dean of research Craig Batty broods, “Imagine, for example, being told that you can’t submit your novel for review as a research output because it didn’t come out of a theoretical problem. Or that you can’t submit your award-winning short film because it’s not long enough. Or that your music composition doesn’t contribute to innovations in the form because it was broadcast commercially.”

For the artist-academic, the quest for recognition is often undermined by the government’s relentless attempts to reduce the vitality and respectability of the arts, often through funding cuts and other de-investments. These cutbacks are not only materially devastating for artists but culturally and symbolically damaging.

For instance, another misconception, both within and outside the university, is that creative writing is a leisure activity, a mere hobby that contributes nothing to the economic bottom line. Another misreading is that writing is cathartic, a thinly disguised therapy for middle-aged women who want to reclaim their sacred selves. Inherent in this dismissal, which often sinks into offensive derision, is the insinuation that creative writing is all about Feelings and Emotions (for the record, writing itself is a method of thinking, and thinking is hard work).

Teaching writing is also hard work – at the zoo as much as in the classroom. Teaching memoir, for instance, requires empathy; teaching correct apostrophe placement requires the patience of a saint and the precision of a surgeon. But it is vital labour. Teaching students to write and read – or more often to rewrite and reread – is essential for success in all disciplines, from international relations to neuroscience.

For this reason, the most infuriating question of all, “Can creative writing be taught?”, is pointless. Creative writing is being taught. And, at my university, as elsewhere, it is more popular than ever.

Kate Cantrell is a lecturer in writing, editing and publishing at the University of Southern Queensland.

Palaeontology: The shadow of the dinosaur

Jurassic Park has a lot to answer for. Since the mid-19th century, dinosaurs have fascinated the public in general and children in particular. Hence, they have been endlessly exploited commercially – never more so than in Steven Spielberg’s 1993 film and its sequels. And that exploitation has inevitably led to all manner of misunderstandings.

The problem for palaeontology, in particular, is that it has become associated – among some fellow academics as well as the general public – with people scraping about in remote scrubland looking for dinosaur bones. In fact, palaeontology encompasses the study of all organisms that died more than 10,000 years ago. Given the virtual absence of any extant morphological, biochemical or behavioural information, it has to use the full range of techniques used by forensic pathologists investigating more recent deaths – computer algorithms, electron microscopes, CT scanners, finite element modelling and isotopic microprobes, alongside the full range of biological disciplines – to reconstruct the appearance, performance and lives of long-extinct species.

A particular irritation for palaeontologists is the assumption perpetrated in books and even some “natural history” television programmes that pretty much any vaguely lizardy prehistoric creature is a dinosaur. Thus, the giant marine reptiles of the Mesozoic Era, such as the dolphin-shaped ichthyosaurs, are often falsely termed “swimming dinosaurs”, while the extraordinary flying reptiles (pterosaurs) of the same era are erroneously called “flying dinosaurs”. In fact, flying dinosaurs are what we today call birds.

Even less forgivable is the popular dinosaur status of the Dimetrodon, a bizarre creature with a tall, skin-covered sail running down its back that went extinct long before the first dinosaurs trod the Earth.

A curious aspect of dinosaurs’ popularity is the increasing numbers of enthusiasts who attend scientific conferences and actively contribute their own ideas about dinosaurs via the internet. This phenomenon started in the 1980s with Robert T. Bakker, whose lovely freehand drawings, handed out to the audience during public lectures, helped to popularise his theory that, far from being the lumbering creatures traditionally imagined, dinosaurs were highly active and very energetic. Bakker courted publicity in his battered hat, flowing beard and cowboy-style outfits, but he had a solid academic background, having worked with eminent Yale palaeontologist John Ostrom, and his theories were confirmed when dinosaurs with feathers or a filamentous covering were discovered in the late 1990s, implying that they would have been endothermic (“warm-blooded”) and capable of high-exercise metabolism.

However, the contributions of the “dinosaurophiles” inspired by Bakker are not always so worthy. They compile online lists of taxa, promote many controversial ideas and add to an already vast collection of dinosaur illustrations (many artists within this community make careers selling their own versions of how dinosaurs looked). Many of these contributions, although interesting in their own right, can be distractions to the academic research community.

Imaginary leaps about the appearance of dinosaurs were also abundant in Jurassic Park, of course. By the early 1990s, we already strongly suspected that the film’s “raptors” should be feather- and filament-covered. However, the best CGI equipment available at the time was unable to reproduce such complexity (and the sequels opted for consistency over accuracy). At least the renowned dinosaur palaeontologist, Jack Horner, who was a consultant on the films, was able to ensure that the CGI dinosaurs had a measure of anatomical veracity – even if their biology, habits and “intelligence” were also purely conjectural.

As for the idea that dinosaurs could be brought back to life via their DNA, this is pure fantasy. Yet people still ask me about it in public lectures. To be fair, such questions have also been encouraged by the publication of scientific reports of the discovery of dinosaur DNA, but these have turned out to be the result of sample contamination by DNA from living sources (such as pollen, shed skin cells or fungal spores). The simple fact is that dinosaur DNA will not be preserved intact after at least 66 million years of decay and burial. So, sadly for me and millions of children, dinosaurs will never be reanimated.

We’ll just have to make do with birdwatching.

David Norman is a reader (emeritus) in the department of Earth sciences at the University of Cambridge, curator (emeritus) of the Sedgwick Museum and Odell fellow in the natural sciences, Christ’s College Cambridge.

Education studies: All the world’s a classroom

What is education? That’s axiomatic, right? But what does it mean to study education? That is a much tougher question – and the field’s main associations in the US and the UK seem to have sidestepped providing a definition on their websites.

Coming up with a good definition wouldn’t be easy. But it is clear that some colleagues could be better informed. Take this recent dead-end introductory social encounter with a colleague from the natural sciences:

Colleague: “Hi. What do you do, then?”

Me: “I do education studies.”

Colleague: “Ah. Right…”

The colleague’s unimpressed look implied that they thought education studies was staid, or even – to use a popular trope – not a “proper” degree.

Education studies may have this conversation-killing image because it is mistakenly assumed, even among fellow academics, to be mainly about prepping students for teaching. But it is not. Teacher education is a separate programme of study – although it, too, is rich, rigorous and extremely challenging.

Education studies is neither exclusively nor primarily a vocational programme. Neither is it training. It draws from across the disciplines to study how teaching and learning occur – not only in schools but also more informally, throughout lifetimes, in wider society. The usual binaries of defining a field of study – such as practical/theoretical, scientific/artistic, vocational/academic or activistic/traditional – are unhelpful because education studies is all of these things.

A particularly exciting, impactful and growing approach is called critical education. This occupies significant space on campuses in North America and Europe, mainly as critical pedagogy. As a co-organiser of the International Conferences on Critical Education and co-editor of the Journal for Critical Education Policy Studies, I am helping it to flourish as part of the mainstream of education studies in the UK, too.

Mainstream modules already have some coverage of the connection between curricula and pedagogy and wider issues of social justice and inequality. But critical education more fully embraces that big picture by explicating the education system, and learning more widely, as anchored strategically in the dominant class’s politics and ideology. It examines education in the context of increasing inequality and unfairness over the past 40 years of neoliberalism, and it seeks to harness education – formal and informal – in pursuit of social change.

The principle is that critical education is a prerequisite for the future to be better than the past. Students confront such deep questions as: “Why is education the way it is?”, “How can education and learning be changed to promote fairness and equality?” and “What is our role as educationalists to catalyse the changes we want to see?”

But not everybody wants education to be transformative in this way, of course, and the discipline is under fire. There are people outside education studies and the critical education approach who understand that what we do threatens to disrupt the status quo and they would rather we did not. There are private political organisations and governments that want to stifle academics who engage students in critiquing the system and the dominant ideology and politics that drive it.

Yet rather than subdue us, this is the kind of fire that critical educators relish critiquing in our classrooms because it exemplifies the political nature of all education.

That natural sciences colleague of mine really missed out on an important and engaging conversation.

Alpesh Maisuria is associate professor of education policy in critical education at the University of the West of England, Bristol. He also holds various positions of leadership in the global network of scholars advancing critical education.

Nursing studies: A mixed case history

During my long career as a nursing lecturer, I was often confronted by puzzled academic colleagues who couldn’t quite grasp what I could be lecturing about – and how it counted as higher education.

Their image of a nurse was typically something like Hattie Jacques in the Carry On films, keeping hospital wards running through sheer force of personality rather than any higher cognitive faculties.

The funny thing is that some people even within nursing departments are also less than convinced about nursing’s academic prowess.

For a century, nursing in the UK was taught via (paid) apprenticeships in the National Health Service. When it was brought into the academy in the 1980s, the idea was to inject academic rigour and enquiry into a process that was seen as somewhat paternalistic and that, like Hattie, put the emphasis on persona and protocol, rather than insight. No longer would people be merely “trained” for the job: they would be “educated” within a broadly liberal arts framework.

But it isn’t clear that this transformation has actually happened. In 2018, three eminent university nursing professors published an extraordinary editorial in a nursing journal lamenting that “rather than serving as bastions of knowledge generation, professional innovation, curation and dissemination of knowledge, many [university nursing departments] have become corporatised monoliths intent on [producing] commoditised ‘outputs’ that can contribute to the ‘knowledge economy’”.

Yet, they add, “if all that were needed for today’s and tomorrow’s healthcare worlds was an army of new nurses with a paper qualification showing that they were good ‘pairs of hands’ capable of staffing our hospitals, we need not have bothered fighting to get nursing into universities.”

For its part, the government was never entirely convinced that it should consent. In 1988, the then secretary of state for health, John Moore, put on record his misgivings about the possible detrimental effect of the new model on the learning of clinical skills.

The three professors highlight the topsy-turvy world of nursing in the academy 30 years later: “Rather than focusing on the compelling rationales for having nursing in the university – scholarship, research, teaching and service – nursing leaders and their faculty colleagues are faced with the imposition of managerial diktats usually focused on ‘training’, which is invariably about compliance, instead of learning and professional development, which are invariably about thinking, questioning and challenging. Very rarely is there time for true scholarship in its broadest sense – critical thinking, reflection, debate, imagination, curiosity and creativity.”

It is significant that “service” is last on the professors’ list of academic rationales, and their lamentations make clear that the primary motivation for moving nursing into universities was not so much to improve patient care as to increase the profession’s status.

Yet you might well ask what the purpose is of nursing if you dismiss the value of “good ‘pairs of hands’ capable of staffing our hospitals”. The professors would do well to respond to the 2013 Francis report into shockingly deficient standards of care at Stafford Hospital – which recommended that sufficient practical elements should be incorporated in nursing training to ensure that a consistent national standard is achieved by all trainees.

Just over four years ago, one of Moore’s successors, Jeremy Hunt, announced a new apprenticeship plan for nurses, amid fears that “the routes to a nursing degree currently shut out some of the most caring, compassionate staff in our country. I want those who already work with patients to be able to move into the jobs they really want and I know for many, this means becoming a nurse. Not everyone wants to take time off to study full-time at university, so by creating hundreds of new apprentice nurses, we can help healthcare assistants and others reach their potential.”

Universities have been quick to leap into the provision of nursing apprenticeships themselves, via degree apprenticeships. But, personally, I do think it is time to have a genuine review of where and how nurse preparation should be conducted, focused on the needs of the patient, not the profession.

And if that means admitting that the sceptics among our academic colleagues were right all along about nursing’s place in the academy then so be it.

Ann Bradshaw is a retired senior lecturer in adult nursing at Oxford Brookes University.

POSTSCRIPT:

Print headline: Please don’t let me be misunderstood
