One of the most important trends in scientific publishing over the past decade has been the advent of the open access mega-journal.

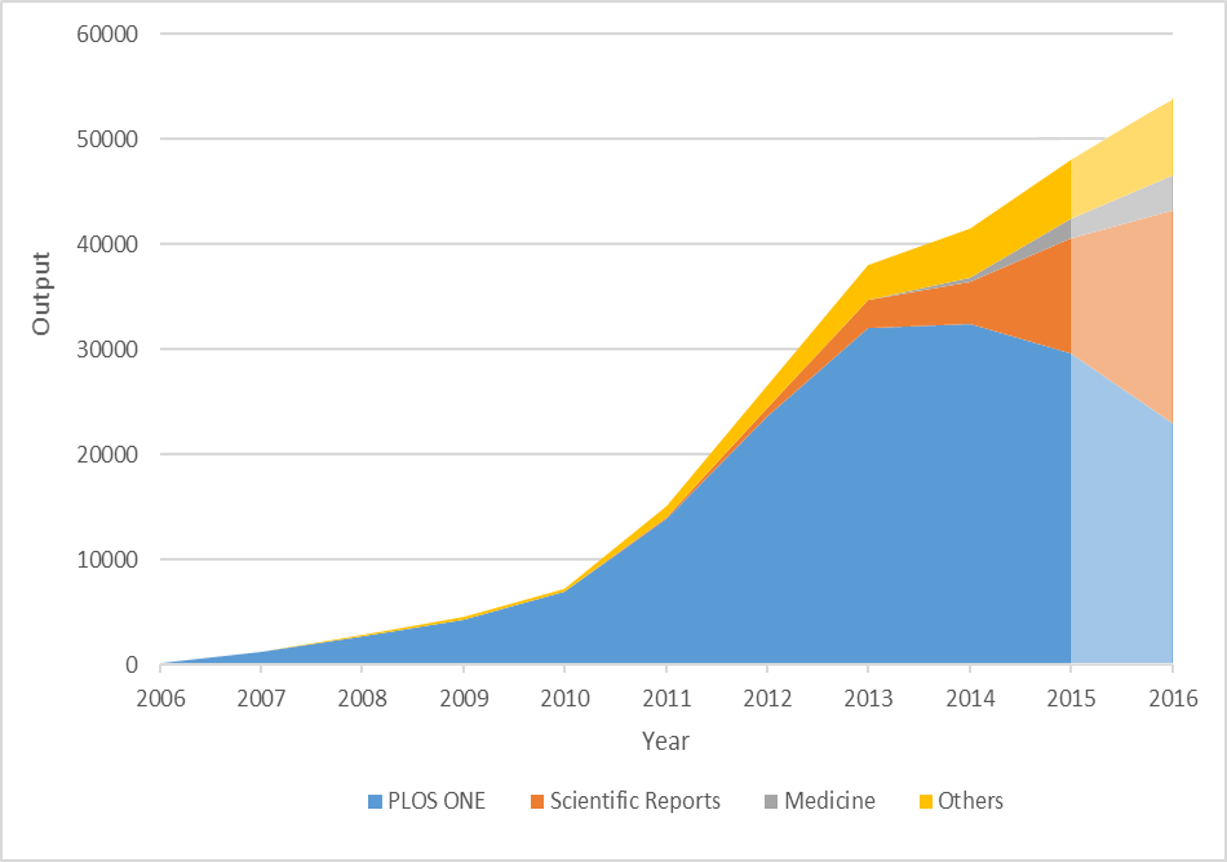

Plos One, still seen as the exemplar mega-journal, is 10 years old this year. At its peak in 2013, it published 31,509 articles – an unprecedented scale of output for a single journal title. The fact that it has for some time been the largest academic journal globally is partly due to its wide scope: Plos One accepts papers right across science, technology, engineering and mathematics subjects (and some social sciences), reversing the 50-year trend for greater and greater specialisation in journal publishing. In recent years, a number of publishers have tried to imitate Plos One but none has been so successful. Until now.

In September, Plos One was overtaken. Nature’s Scientific Reports published 1,940 research articles that month, compared with Plos One’s 1,756. The figures for August were 1,691 and 1,735, respectively. Scientific Reports has grown rapidly since its launch in 2011, a rise that has coincided with (and, some have suggested, partly contributed to) a decline in Plos One’s output. Like Plos One, Scientific Reports publishes across STEM, although in practice the former publishes more papers in the health and life sciences and the latter more in the physical sciences.

Such journals are only possible in an open access environment. The business model based on pre-publication article processing charges (APCs) allows rapid scaling: a journal’s income grows in direct proportion to its output, something that does not work for traditional subscriptions. New publishers such as PeerJ have, in a short space of time, gained a respectable foothold in the market. Last year, PeerJ published about 800 articles in the health and life sciences.

A key factor in the model is a relatively low rejection rate. Plos One publishes 65 to 70 per cent of the submissions it receives, so the wasted (and unfunded) effort of managing peer review for articles that are ultimately not accepted is kept to a minimum. This is possible only because of mega-journals’ particular approach to quality control, perhaps their most controversial feature. Supporters call it “objective peer review”; sceptics call it “peer review-lite”. Articles are assessed on their “scientific soundness” only. No consideration is given to an article’s novelty, importance or interest to a particular subject community. These more subjective judgements (as some see them) play no part in the decision to accept or reject; the only question is: is it good science?

Many would argue that the peer review carried out by Plos One differs from that of other journals only in scope, not in rigour. The Plos requirement that authors deposit data alongside the paper also enhances quality, it is argued, since scientific results can be more accurately assessed and, where appropriate, replicated. Good science is actually enhanced, many would argue, because the approach allows the publication of null results and replication studies, which are typically rejected by journals that focus only on novelty.

Moreover, the soundness-only approach to peer review represents to many a welcome democratisation of science, moving decisions about what is important and novel out of the hands of a few old men (they are normally men) stuck in old paradigms and into those of the “community” as a whole. Others argue that by dispensing with traditional peer review, mega-journals (and other journals that use soundness-only peer review) are casting off the valuable filtering function that researchers rely on, even if only to save time. Mega-journals, it is argued, simply have a lower quality bar.

It might be suggested, however, that mega-journals, far from competing with conventional titles, can in fact have a symbiotic relationship with highly selective journals. Mega-journals have been called “cash cows”, and they can ostensibly create efficiencies, even economies of scale, for publishers. This means that mega-journals can provide financial subsidy for other, higher-rejection-rate journals within a publisher’s portfolio. They even potentially solve one of the big conundrums of open access publishing: how to support highly selective titles with an APC business model. Highly selective titles reject more papers than they accept, but receive income only from accepted papers. In a tiered model of publishing, highly selective titles are given financial subsidy by the same publisher’s mega-journal, which (crucially) in return receives what might be called “reputational subsidy” from the highly selective titles. The mega-journal can trade on the brand.

This seems to be what is happening at Plos, and Scientific Reports undoubtedly receives a massive reputational subsidy from the Nature brand. Other journals, such as BMJ Open and AIP Advances, may have something similar going on. However, other mega-journals have been set up without a recognisable brand to give them reputational subsidy. In at least some of these cases, the mega-journal seems to be little more than a cascade journal, receiving papers rejected by the publisher’s more selective titles and offering authors the attraction of not having to go through the hassle of resubmitting elsewhere. Of course, cascading, now increasingly adopted by publishers beyond mega-journals, may be a good way of eliminating waste in the system (minimising the submission-rejection-submission spiral), but it can sometimes look a little like an APC grab.

The prevalence and strength of these views within sections of the scholarly community suggest that quality concerns about mega-journals won’t go away. This is not helped by the fact that, at the bottom of the pile, there are out-and-out “predatory” titles willing to publish anything for an APC. Let the buyer beware! In many respects, however, the rise of the predatory journal is the inevitable downside of lowering the barriers to market entry, which has enabled competition and promoted innovation.

One often forgotten aspect of this innovation is a second component of quality assessment adopted by mega-journals: assessment after publication. The community’s assessment is evident in its reception of an article, particularly in the use made of the article and the way it is cited and discussed. This can be shown in article-level metrics, which most mega-journals have promoted while eschewing (publicly, at least) crude journal-level metrics such as the impact factor.

Developments such as this have given rise to further innovation. F1000 Research combines something like the mega-journal model with open post-publication peer review and comment. In the humanities and social sciences, a number of mega-journals have been set up, although they have found the APC model challenging for their contributors. SAGE Open accordingly charges very low APCs, while Open Library of the Humanities, originally set up as a Plos One for the humanities, has rejected the APC model entirely, and relies on sponsorship and membership. The notion of soundness-only peer review is also problematical in the humanities.

What remains to be seen is whether mega-journals, as currently constituted, will prove to be a major innovation that contributes to the reshaping of research publishing in an increasingly open access world, or whether their real importance will lie in being a stepping stone to even more radical forms of scholarly communication. This will depend partly on the extent to which the open access “wild animal” is domesticated. Signs of that domestication already abound, meaning that any change is more likely to be incremental than disruptive.

It is, of course, possible that mega-journals will sink without trace: that probably applies to some of the current smaller hopefuls. But there now seems to be momentum behind some of the larger titles, which means that they, at least, are likely to continue to prosper. In the short term, though, the battle to publish the largest journal in the world seems to be swinging towards a new form of a very old journal, Nature.

Stephen Pinfield is professor of information services management at the University of Sheffield. He is currently principal investigator on an AHRC-funded project investigating mega-journals and the future of scholarly communication.
