The spring budget’s commitment to put the UK at the forefront of supercomputing was a welcome move to put flesh on the bones of the Prime Minister’s vision to cement our place as “a science and technology superpower by 2030”.

The headlines are encouraging, but we have to ask why it has taken us so long to grasp what has long been blindingly obvious to the US, Germany, China, Japan, and Singapore and Australia (working together), all of which have spent the past decade planning for the exascale supercomputer revolution. After all, the science superpower ambition was first articulated by Boris Johnson back in 2019.

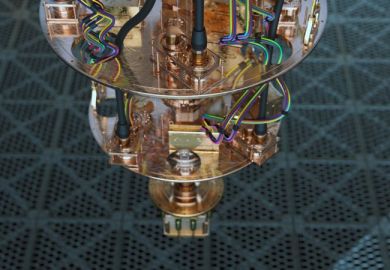

For a dozen years there has been an overwhelming case for an exascale supercomputer in the UK, the nation that led the way in computing thanks to pioneers such as Charles Babbage, Ada Lovelace and Alan Turing. Exascale machines are capable of a billion billion (10 to the power of 18) operations per second, and are anywhere from five to a thousand times more powerful than the older petascale generation.

Because of the fast interconnections between the thousands of computers (nodes) within them, supercomputers will always outperform cloud computing when tackling pressing global challenges, for example by creating digital twins of factories, fusion reactors, the planet and, in our work, even cells, organs and people. Such models can accelerate research in energy, climate and medicine.

A report on “The Future of Compute”, published this month, pointed out (as have earlier reports) that the UK has lacked a long-term vision. That is why the budget commitments to exascale (along with quantum and AI) are hugely welcome. The Science and Technology Framework drawn up by the newly created Department for Science, Innovation and Technology also promises a more strategic vision.

But if the world’s first exascale machine, the US’ Frontier, is anything to go by, a UK supercomputer will command a hefty price tag of at least half a billion pounds. The same sum again will be needed to make full use of it, because software has to be customised for high-performance computers. We will also need new skills, which will take time and sustained funding to develop.

The budget document’s headline figure of £900 million for “cutting-edge computing power” seems to fit the bill, but it is earmarked for both exascale and AI. It is unclear whether the government envisages a separate AI initiative or is simply alluding to the fact that an exascale machine that uses processors called GPUs (graphics processing units) is much better suited to AI than the UK’s existing machines are.

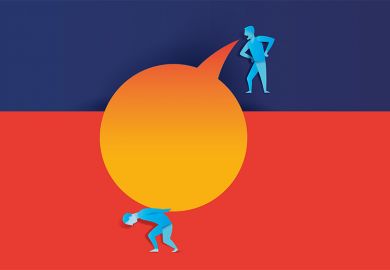

Above all else, we hope that the government has learned the lessons of recent history. The UK’s lack of long-term vision became painfully clear in 2010, when the Engineering and Physical Sciences Research Council (which manages supercomputing in the UK) announced that there would be no national supercomputer after 2012.

One of us – Peter Coveney – led the charge to challenge that assertion. In response, the science minister at the time, David Willetts, released about £600 million for supercomputing. But when he stepped down, the momentum was lost and the UK went ahead with Archer and Archer2 in Edinburgh: technological cul-de-sacs that lack GPUs.

As of last November, the UK had only a 1.3 per cent share of global supercomputing capacity and did not have a supercomputer in the top 25. Until the latest announcement bears fruit, UK researchers will have no exascale machine of their own, and they will not have direct access to those being developed by the European Union; the first, Jupiter, will start working in two years.

Micromanagement by generalists has clouded our view of the future. You have to lobby the government, petition, make special cases and repeat this process endlessly. The budget says the exascale investment is still “subject to the usual business case processes”.

As a result of the short-termism of these processes, we end up with a series of knee-jerk, sticking-plaster policies crafted with newspaper headlines in mind, rather than a long-term vision. This creates a computing ecosystem that is complex, underpowered and fragmented, as the compute report makes clear. To fix such failings, Sir Paul Nurse argues in his recent review of the UK’s research and innovation landscape that the government “must replace frequent, repetitive, and multi-layered reporting and audit…with a culture of confidence and earned trust”. Academics must be empowered to make big decisions about the investments needed.

As well as boosting research in areas as diverse as climate forecasting, drug development, fusion and the large language AI models that are currently making headlines, exascale computers will drive the development of more energy-efficient subsystems, notably through analogue computing, and bootstrap developments in AI, semiconductors and quantum computing.

Yes, the price will be high, and delivering an exascale machine by 2026, as the recent review recommends, will be tough. But please let this not be another fix-and-forget policy. It is already time to start thinking about a national zettascale supercomputer.

Peter Coveney is director of the Centre for Computational Science at UCL and Roger Highfield is science director of the Science Museum. They are co-authors of Virtual You: How Building Your Digital Twin Will Revolutionize Medicine and Change Your Life, published on 28 March.
