The Times Higher Education World University Rankings was created 20 years ago. It has been through various iterations since its formation, but one major component has always been the reputation of an institution, which accounts for one-third of the ranking. We identify the reputation of a university through an annual survey that is distributed to respected academics whose work has been recognised by their peers. We do not allow nomination systems, under which a third party who is not necessarily a published scholar could receive an invitation.

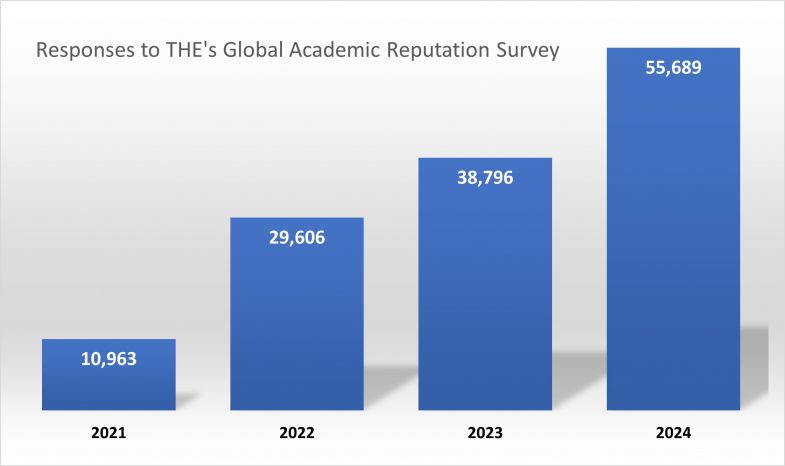

As the number of institutions choosing to participate in the World University Rankings has grown, so has the size of THE’s Global Academic Reputation Survey. In 2021, the last year that we partnered with Elsevier to administer the survey, we received just under 11,000 responses. The following year, we began running the survey in-house and were able to increase the response numbers such that this year’s survey has more than 55,000 respondents.

But as our rankings grow, so does the scrutiny that institutions apply to their own performance. This can, of course, be a positive thing: universities can use the results to develop strategies, and to understand the higher education landscape and their place within it. However, we have become aware that a few universities may be trying to boost their rankings performance in ways that are not consistent with the good spirit exemplified by the majority of participating institutions.

Take self-voting, for example, in which academics vote for the institution to which they are affiliated. Generally, we do not have a problem with an academic voting for their own institution. After all, if you think your university is one of the best in the world, why shouldn’t you be allowed to say so? However, if there is any evidence of systematic abuse of this by an institution – for example, instructing its academics to vote in a particular way – that would require intervention from any responsible ranker. Last year, we introduced a self-voting cap to address this, under which we limit self-votes to 10 per cent of a university’s total votes. While this has affected only a handful of institutions, it does rein in any potential abuses and acts as a deterrent, without our needing to take more extreme action.
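
As an illustration only, a cap of this kind could be sketched in a few lines of Python. The function name, the argument names and the decision to measure the 10 per cent against the raw total (self-votes plus votes from elsewhere) are assumptions made for the example; they do not describe the production calculation.

```python
def capped_self_votes(self_votes: int, external_votes: int, cap_percent: int = 10) -> int:
    """Return how many self-votes count once the cap is applied.

    Assumption: the cap is a percentage of the raw total
    (self-votes + external votes), rounded down.
    """
    total = self_votes + external_votes
    limit = total * cap_percent // 100  # integer arithmetic avoids float rounding
    return min(self_votes, limit)


# A hypothetical university with 30 self-votes and 70 votes from elsewhere:
# only 10 of the 30 self-votes would count.
counted = capped_self_votes(self_votes=30, external_votes=70)

# A university with 5 self-votes out of 100 is unaffected.
unaffected = capped_self_votes(self_votes=5, external_votes=95)
```

Under this reading, a university whose self-votes already sit at or below the threshold sees no change, which matches the observation that only a handful of institutions are affected.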

This then leads us to explore wider voting patterns. Although the self-voting cap may reduce the effect of intra-institutional voting, it does not provide any protection against possible arrangements being agreed between universities. To fully understand the potential issues, we have undertaken network analysis of voting patterns and have started to look at what we call “respondent diversity”. A university that attracts votes from far and wide would have a broad spread of sources, with a more uniform distribution of voters. But where an institution has a high proportion of votes from a small number of universities, and where that pattern is mirrored by those other universities, that could indicate something that requires further inspection and, potentially, action.
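
To make the idea concrete, here is a minimal sketch of how concentration and mirrored voting might be measured. Everything in it is an assumption for illustration: the data layout (pairs of voter's university and voted-for university), the function names, the use of a Herfindahl-style sum of squared shares as the concentration measure, and the 50 per cent threshold. It is not the metric described in this article, whose format has not been announced.

```python
from collections import Counter, defaultdict


def source_shares(votes):
    """Map each voted-for university to {voter's university: share of its votes}."""
    counts = defaultdict(Counter)
    for source, target in votes:
        if source != target:  # self-votes are treated separately
            counts[target][source] += 1
    shares = {}
    for target, by_source in counts.items():
        total = sum(by_source.values())
        shares[target] = {s: n / total for s, n in by_source.items()}
    return shares


def concentration(share_by_source):
    """Sum of squared shares: 1.0 means every vote came from a single university."""
    return sum(p * p for p in share_by_source.values())


def mirrored_pairs(shares, threshold=0.5):
    """Pairs where each university draws at least `threshold` of its votes from the other."""
    pairs = set()
    for a, by_source in shares.items():
        for b, share in by_source.items():
            if share >= threshold and shares.get(b, {}).get(a, 0.0) >= threshold:
                pairs.add(tuple(sorted((a, b))))
    return pairs


# Hypothetical votes as (voter's university, voted-for university).
votes = [
    ("A", "B"), ("A", "B"), ("B", "A"), ("B", "A"), ("C", "A"),
    ("C", "D"), ("E", "D"), ("F", "D"), ("G", "D"),
]
shares = source_shares(votes)
flagged = mirrored_pairs(shares)
```

In this toy data, university D draws its votes evenly from four sources and has a low concentration, while A and B draw most of their votes from each other and appear as a mirrored pair. A flag of this kind would only be a prompt for closer inspection, since close collaboration between two institutions can produce similar patterns for legitimate reasons.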

Our first step is to look at our ranking focused on reputation. We plan to enhance this by introducing measures that explore voter diversity and reward those universities that have broad respondent bases. Conversely, institutions that have a high percentage of their votes originating from a narrow group of universities will score lower. The exact format of this new metric is yet to be announced, but we will develop this to be as fair to the wider community as possible.

We are also actively working on how to mitigate any effects of voting patterns in our World University Rankings, and associated rankings such as the Arab University Rankings.

We hope that this will actively discourage inappropriate behaviour and, in so doing, maintain the quality of what is the largest academic survey of its kind. Where we detect abuse, we reserve the right to take action up to and including setting reputation scores to zero.

Mark Caddow is a senior data scientist at Times Higher Education.
