Increasing digital surveillance by institutions could be further marginalising historically disadvantaged students, it has been warned.

Advanced network infrastructure, internet-connected devices and sensors, radio frequency identification, data analytics, and artificial intelligence (AI) are seen as key digital tools to bring about the age of “smart universities,” according to Lindsay Weinberg, a clinical assistant professor at Purdue University.

However, as she writes in her new book Smart University, they also have a “darker side” – with universities increasingly using big data analytics to target “right fit” students.

Speaking to Times Higher Education, Dr Weinberg said this can involve targeting students based on their zip code, targeting those most likely to enrol or least likely to need financial aid, or even recruiting students who are unlikely to be admitted so that the institution appears more competitive.

“There’s all sorts of ways that issues of bias and discrimination can get reproduced through these types of recruitment efforts,” said the director of the Tech Justice Lab in the John Martinson Honors College.

“The type of student that will have access to higher education and that will actually be successful is going to look like students from dominant groups and students who are already structurally advantaged.”

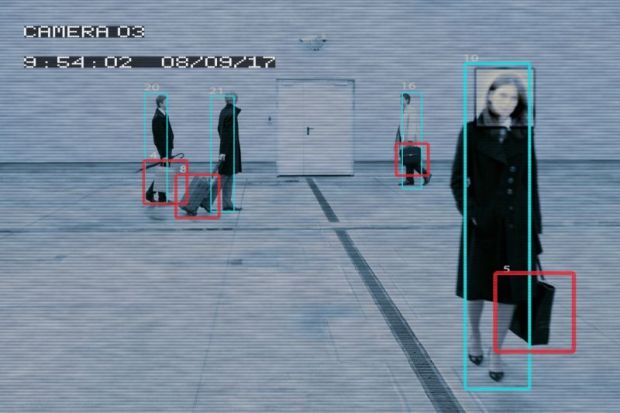

The book also highlights the growing spread of facial recognition on campuses – including Columbus State University, Florida International University, Iowa State University, the University of Alabama, the University of Illinois and the University of Wisconsin.

Backers believe the technology can help administrators monitor student attendance and reduce the time spent checking in and out of buildings, and could eventually help lecturers tailor their lessons to students’ emotional states.

Dr Weinberg said its prevalence was a “symbol of a much larger problem”, with many universities directly involved in the research behind the technology itself – which is now being disproportionately used for policing or immigration and customs enforcement.

“It’s a really good example of how institutional complicity operates in practice,” she added.

“It’s not like one set of evil administrators who are trying to secretly surveil students… it’s researchers operating perhaps in good faith trying to improve a technology that they think is going to be used for either neutral or good purposes.”


Those in favour of smart universities argue that they need to learn from the data analytics strategies of big technology firms such as Amazon and Netflix, despite their longstanding issues around privacy rights violations and discrimination.

“For universities to keep pace with innovation or to provide the right students for the knowledge economy, there’s this idea that universities essentially need to emulate the practices of industry as well,” said Dr Weinberg.

“With industry, it’s about cost-cutting, it’s about exploitation, it’s about very short-sighted business-based ideas of what success looks like, and I don’t think that that’s what public higher education is really supposed to be about.”

Much attention has recently been paid to the weaponisation of campus security by some colleges to deal with the pro-Palestinian movement.

But Dr Weinberg said that universities have often aggressively partnered with the “apparatus of violence in order to be able to repress free speech”, and have frequently got away with it.

“People just operate under the assumption that universities are bastions of democracy, that they’re designed to preserve free speech and that these are kind of unfortunate accidents in the story.

“I actually think that universities institutionally have generally been deeply committed to trying to regulate and maintain and manufacture a certain level of baseline consent to dominant power relations. And for that reason, I think it requires ongoing vigilance.”
