Time to think

Has Big Tech lived up to its purportedly altruistic ideals? Google’s unofficial motto of “don’t be evil” suggests that its intention was always benevolent, despite the deceptive and unethical practices it has adopted in a number of areas – not least in developing facial recognition software, the data collection for which reportedly exploited homeless people of colour.

Bill Gates now heads one of the world’s largest private charities, yet the company he founded, Microsoft, is relatively open about its compliance with state censorship in China. Apple contributes millions of dollars in financial aid for disaster relief but has a grim track record when it comes to working conditions, including allegations that it may have benefited, directly or indirectly, from forced Uyghur labour. Amazon’s working practices have also come under serious scrutiny, especially in the wake of the pandemic and the recent destruction of one of its giant US warehouses by a tornado. As for Facebook (now Meta), there is blood on its hands after it failed to prevent its platform being used to incite violence against the Rohingya minority in Myanmar.

But computing was never about the greater good, no matter what those Big Five firms claim. It was, and still is, about the greater exploitation of productivity. From the moment humans sharpened a flint, technology has been a way to make tasks more effective – and, nowadays, that’s done for profit. Before we had digital computers, we had human ones: people – usually women, as it was seen as a low-status role – employed to perform calculations, over and over, by hand. The goal is to maximise production, to capitalise, to hold market power.


It doesn’t stop there, though. Big Tech reaches much further than might ever have been expected in the industry’s early days. The rise of machine learning – and the access to the vast quantities of user-centred data that spurs it on – means that Silicon Valley corporations have a profound grip on our lives. They hold vast power, and if we want to enjoy the conveniences of a digital society, we can’t entirely avoid that. It’s a trade-off. Our society thrives on interconnections and limitless access to information; at times, it depends on them. Imagine this pandemic without that online space. Imagine getting through a day without your smartphone.

At present, the major developments in AI come from those with the money, the talent and the data. The world’s most powerful nations, the US and China, are also the AI superpowers. Start-ups making new leaps are acquired by established corporations. University researchers are poached into business, lured by high wages and (potentially) better working environments. The resources of grant-funded projects are microscopic when compared with the big players’ budgets. Can universities really have much influence on where the AI revolution takes us?

They can – but the roles have changed. Universities are now working on a different scale, but we can still innovate, still foster ideas, still advocate for altruistic and beneficial technology. We can explore data and push for open science. We can work with industry to co-create and advise. And we can hold a mirror up to Big Tech firms and force them to look at themselves.

In the past few years, there has been a marked increase in the academic study of AI ethics. Although it was already an important subject in its own right, the escalation of algorithmic decision-making (and the fallout when those decisions go wrong) pushed ethics into the spotlight. The UK House of Lords’ Select Committee on Artificial Intelligence published its report in April 2018. The report, which was well received by the community, acknowledged that ethical development was key, stating: “The UK’s strengths in law, research, financial services and civic institutions mean it is well placed to help shape the ethical development of artificial intelligence and to do so on the global stage.”

Big Tech is STEM-focused, and it often treats other fields as unimportant, dismissing centuries of philosophy, arts, humanities and social science as irrelevant. But it is learning that it needs to engage. An increased emphasis on the responsible development of technology means it has listened to experts outside its walls. Some of this is regulatory pressure, much comes from academia, but there is also a heartening increase in public awareness, engagement and activism. It didn’t stop Google from firing its ethics researchers Timnit Gebru and Margaret Mitchell for self-critical research, but the backlash did force the company to re-evaluate its practices.

But universities shouldn’t be too smug. We need to get our own house in order too. When working with industry, look out for strings attached. Plenty of institutions receive money from tech companies, and it’s not unheard of for funding to cloud judgement – something the Massachusetts Institute of Technology learned the hard way when it accepted donations from Jeffrey Epstein even after his conviction as a sex offender.

The slow and contemplative academic practices, often mocked by the fast-moving tech world, offer space to reflect on how new technologies are developed, deployed and used. We are an antidote to the goal-focused business world.

That said, we cannot be too slow. We are also perpetrators and victims of our own productivity practices. If we wait anything from a few months to a few years for a journal article to be published, we’ve missed the boat. We have to rethink our own ways of responding and interacting. When it comes to Big Tech, we can contribute in new ways, but we can’t afford to be left behind.

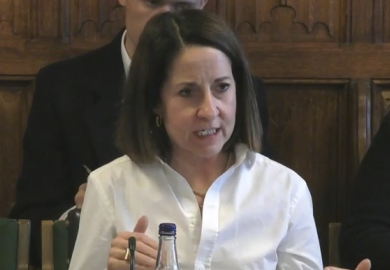

Kate Devlin is reader in artificial intelligence and society at King’s College London.

Keeping good company

How does it all end for humanity? Will it be a giant meteorite crashing from the sky, like it was for the dinosaurs? Or a catastrophic thermonuclear explosion, as we all thought it would be during the Cold War? Or a global pandemic many times deadlier than Covid-19?

Australian philosopher Toby Ord argues in his 2020 book The Precipice: Existential Risk and the Future of Humanity that, in fact, the greatest threat is posed by an artificial super-intelligence poorly aligned with human values. Indeed, he bravely (if that is the word) estimates that there is a 10 per cent chance of such an end in the next century.

Given that I have devoted my whole professional life to building artificial intelligence, you might think such concerns would give me pause for thought. But they do not. I go to sleep at night and try to dream instead of all the promise that science in general and AI in particular offer.

It may be decades, or even centuries, before we can build an AI to match human intelligence. But once we can, it would be very conceited to think a machine could not quickly exceed it. After all, machines have many natural advantages over humans. Computers can work at electronic speeds, which far exceed biological ones. They can have much greater memory, ingesting datasets larger than human eyes can contemplate and never forgetting a single figure. Indeed, in narrow domains such as playing chess, reading X-rays, or translating Mandarin into English, computers are already superhuman.

But the fact that more general artificial super-intelligence remains a distant prospect is not the reason that I am unconcerned by its existential implications. I’m unconcerned because we already have a machine with far greater intelligence, power and resources at its disposal than any one individual. It’s called a company.

No person on their own can design and build a modern microprocessor. But Intel can. No person on their own can design and build a nuclear power station. But General Electric can. Such collective super-intelligence is likely to surpass the capacities of mechanised super-intelligence for a long time to come.

That still leaves the problem of value alignment, of course. Indeed, this seems to be the main problem we face with today’s companies. Their parts – the employees, the board, the shareholders – may be ethical and responsible, but the behaviours that emerge out of their combined efforts may not be. Just 100 companies have been linked to the majority of global industrial greenhouse gas emissions since 1988, for instance. And I could write a whole book about recent failures of technology companies to be good corporate citizens. In fact, I just have.

Consider, for example, Facebook’s newsfeed algorithm. At the software level, it’s an example of an algorithm misaligned with the public good. Facebook is simply trying to optimise user engagement. Of course, user engagement is hard to measure, so Facebook decided instead to maximise clicks. This has caused many problems. Filter bubbles. Fake news. Clickbait. Political extremism. Even genocide.

This flags up a value alignment problem at the corporate level. How could it be that Facebook decided that clicks were the overall goal? In September 2020, Tim Kendall, who was director of monetisation for Facebook from 2006 through 2010, told a congressional committee that the company “sought to mine as much attention as humanly possible… We took a page from Big Tobacco’s playbook, working to make our offering addictive at the outset… We initially used engagement as sort of a proxy for user benefit. But we also started to realise that engagement could also mean [users] were sufficiently sucked in that they couldn’t work in their own best long-term interest to get off the platform… We started to see real-life consequences, but they weren’t given much weight. Engagement always won, it always trumped.”

It is easy to forget that corporations are entirely human-made institutions that only emerged during the industrial revolution. They provide the scale and coordination to build new technologies. Limited liability lets their officers take risks with new products and markets without incurring personal debt – often funded, in the modern era, by plentiful sources of venture capital, alongside traditional bond and equity markets.

Most publicly listed companies came into being only very recently, and many will soon be overtaken by technological change; it is predicted that three-quarters of the companies on the Standard & Poor’s 500 index today will disappear in the next decade. About 20 years ago, only four of the 10 most valuable publicly listed companies in the world were technology companies: the industrial heavyweight General Electric was top, followed by Cisco Systems, Exxon Mobil, Pfizer and then Microsoft. Today, eight out of the top 10 are digital technology companies, led by Apple, Microsoft and Alphabet, Google’s parent company.

Perhaps it is time to think, then, about how we reinvent the corporation to better suit the ongoing digital revolution. How can we ensure that corporations are better aligned to the public good? And how do we more equitably share the spoils of innovation?

This is the super-intelligence value-alignment problem that actually keeps me awake at night.

And this is where the academy has an important role to play. We need scholars of all kinds to help imagine and design this future. Economists to design the new markets. Lawyers to draft new regulation. Philosophers to address the many ethical challenges. Social scientists to ensure humans are at the centre of social structures. Historians to recall the lessons from past industrial change. And many others from across the sciences and humanities to ensure that super-intelligence, both in machines and corporations, creates a better world.

Toby Walsh is an ARC Laureate Fellow and Scientia professor of artificial intelligence at UNSW Sydney and CSIRO Data61. His most recent book, 2062: The World that AI Made, explores what the world may look like when machines match human intelligence. His next book, out in May, is Machines Behaving Badly: The Morality of AI.

Driving responsibility

In 1995, Terry Bynum and I wrote a feature article for Times Higher Education that asked, “What will happen to human relationships and the community when most human activities are carried on in cyberspace from one’s home? Whose laws will apply in cyberspace when hundreds of countries are incorporated into the global network? Will the poor be disenfranchised – cut off from job opportunities, education, entertainment, medical care, shopping, voting – because they cannot afford a connection to the global information network?” More than a quarter of a century on, I am still asking.

There is now a deep-seated global dependency on digital technology. The Canadian government’s January 2021 report, Responsible Innovation in Canada and Beyond: Understanding and Improving the Social Impacts of Technology, calls for solutions that are “inclusive and just, undo inequalities, share positive outcomes, and permit user agency”. I agree. To that end, we need an ever-greater emphasis on what we should now call digital ethics, defined as the integration of digital technology and human values in such a way that the former advances rather than detracts from the latter.

Two recent ethical hotspots reinforce this need. One is the estimated 360 million or more over-65s who are currently on the “wrong” side of the digital divide; as the world has come to rely even more heavily on digital technology during the pandemic, these people have been put at greater risk. The other is the more than 700 UK sub-postmasters who were accused of theft on the basis of a faulty digital accounting system. Unverified digital forensics was the only evidence used to obtain the convictions – many of which have now been overturned.

Only virtuous action can promote an ethical digital age. Rules may offer some guidance, but they are a double-edged sword. Within industry and government, a compliance culture has taken firm hold, strangling any opportunity for dialogue and analysis of the complex socio-ethical issues related to digital technology. Organisational silo mentalities must also be replaced by inclusivity and empathy.

Genuinely virtuous action can be promoted in three different ways. Top-down drivers are typically impositions by bodies of authority, which dictate where resources should be placed to achieve some overall goal. Middle-out drivers involve empowering all those within an organisation to propose new ideas, initiate change and support it. Bottom-up drivers typically emanate from grassroots collective action, often citizen-led.

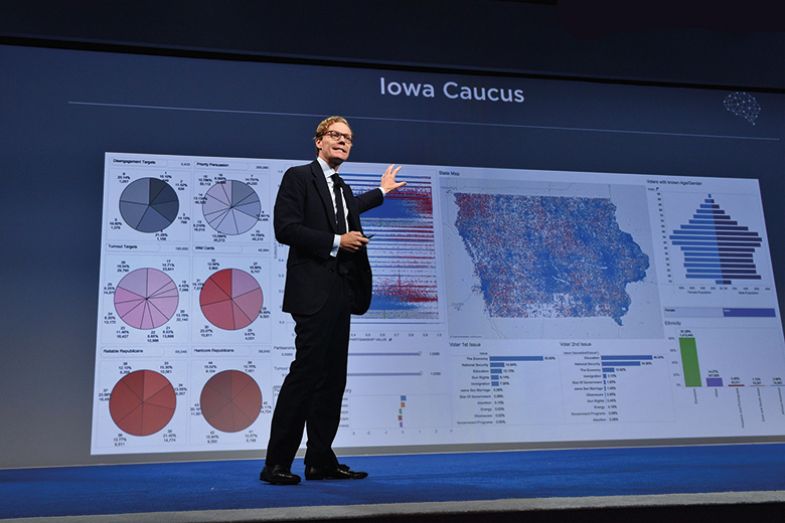

Whistleblowing is a bottom-up driver and has been successfully used to expose and sometimes rectify unethical activity, primarily related to personal data. In 2013, Edward Snowden leaked highly classified information from the US National Security Agency, revealing its numerous global surveillance programmes, conducted with the cooperation of telecommunication companies and European governments. In 2018, Christopher Wylie revealed that Cambridge Analytica, a political consultancy that worked for the Trump campaign, had illegally obtained Facebook information about 87 million people and used it to build psychological profiles of voters and then spread narratives on social media to ignite a culture war, suppress black voter turnout and exacerbate racist views. In 2021, Frances Haugen disclosed thousands of Facebook’s internal documents to the Securities and Exchange Commission and The Wall Street Journal. This has led to investigations into Facebook’s updating of platform-driving algorithms, impacts on young people, exemptions for high-profile users and response to misinformation and disinformation.

Some ethical hotspots may be obvious while others may not, but all must be addressed. This can only be achieved through effective digital ethics education and awareness programmes that promote proactive individual social responsibility, both within work and beyond it, while taking into account both global common values and local cultural differences. Discussion, dialogue, storytelling, case study analysis, mentoring and counselling are all useful techniques.

The way forward, then, is a middle-out, interdisciplinary, lifelong-learning partnership of primary education, secondary education, further education, higher education and beyond. Universities have a key role to play. While some have introduced digital ethics into their technology degrees, others have not, and most existing courses are elective. This must change. Relevant digital ethics elements should be mandatory across undergraduate and postgraduate programmes within many disciplines, including technology and engineering, business and management, science, finance and law.

Nurturing practical wisdom and developing individuals’ confidence and skills to act responsibly and ethically will increase graduates’ awareness and commitment as they move into the world of work. However, to make a lasting difference, universities must engage politically, commercially, industrially, professionally and internationally to influence the strategies and attitudes of those beyond their own walls. They must develop and promote a new vision of the digital future that is theoretically grounded but also pragmatic if industry and government are to embrace it.

We must accept and adjust to the fact that we are all technologists to a lesser or greater degree. How we educate our future generations must reflect this change to ensure that the digital age is good for each individual, as well as for the world at large.

Simon Rogerson is professor emeritus at De Montfort University. He founded the Centre for Computing and Social Responsibility, the international ETHICOMP conference series and the Journal of Information, Communication and Ethics in Society. He was Europe’s first professor of computer ethics. His latest book is The Evolving Landscape of Ethical Digital Technology.

Field marshalling

The biggest peril stemming from Big Tech is nothing less than the loss of human autonomy for the sake of increasing efficiency.

Even when decisions affecting a person are made by other human beings – such as our family, government or employer – we understand the power relationships between those decision-makers and ourselves, and we can choose to obey or to protest. However, if the computer says “no” in a system that we cannot escape, protest becomes meaningless, as there is no “social” element to it.

Furthermore, Big Tech robs us of our core competencies, which are important aspects of our intelligence and, ultimately, our culture. Take map-reading and orientation. While it may be both efficient and convenient to rely on Google Maps, habitual use means that we lose our ability to decide how to drive from A to B. More importantly, we lose the ability to navigate and understand our geographical environment, becoming ever more dependent on technology to do this for us.

Many people may not care about this particular trade-off between convenience and ability. Others may barely even notice it. But the tech-driven loss of decision-making and other abilities is pervasive. A more concerning example regarding autonomy is the profiling enabled by tracking technology when we browse the World Wide Web and engage on social media. The aggregation of data about our preferences and interests is all-encompassing; it includes not merely our shopping choices, but also our aesthetics, our religious or political beliefs, and sensitive data about health or sexual proclivities. Profiling enables the micro-targeting of what we consume – and this includes news, contributing to the widely lamented emergence of so-called filter bubbles or echo chambers.

The algorithms are serving us the content we apparently crave, or at least agree with, but they pigeonhole us into groupings that we cannot escape. Profiling deprives us of the autonomy to decide which content to access and, more broadly, the skill of media literacy. The consequences are serious, as the polarisation of opinion and the micro-targeting of advertising directly affect democracy, as was illustrated by the Facebook-Cambridge Analytica scandal.

Moreover, while the extraction of knowledge from big data has many economic, social and medical benefits, there are numerous instances where more knowledge is positively harmful. For example, imagine a “free” online game that allows the profiling of individuals regarding their likelihood of succumbing to early-onset dementia. Learning of their risk profile may affect people’s mental health. Sharing the information with companies may lead to individuals being denied employment, credit or insurance. It would undermine the spreading of risk on which the whole health insurance industry is currently predicated.

Finally, it is not only what Big Tech does to us, but its sheer power that is disconcerting. At its core, Big Tech is based on the networks connecting us. The more people are connected to a network, the more useful it becomes, leading inevitably towards monopolies. This is true for e-commerce, online auctions, search engines and social media.

In addition, Big Tech firms’ size and power allow them to invest heavily in research and development, meaning they are always ahead of the innovation game. This makes it difficult for governments or publicly funded research institutions, such as universities, to keep up. In turn, it means that the research and innovation agenda is geared towards making Big Tech companies richer and even more powerful.

This loop has created a mindset that sees unbridled innovation through big data, data mining and artificial intelligence as imperative, regardless of ethics and the impact on humanity: the next, inevitable evolutionary step in human development. Universities should question this inevitability through their core missions in teaching and research. They must guard their independence from both politics and commercial interests.

In particular, universities should encourage truly interdisciplinary research, integrating all subjects as equal partners, including in terms of the funding and leadership of projects. It is wrong, for instance, that most funding from UK Research and Innovation in the area of artificial intelligence and autonomous systems has gone to fund computer science and engineering projects, offering little, if any, opportunity for ethicists, lawyers, humanities and social science researchers to critically reflect on what is good for human progress as a whole.

Universities should lead the dialogue about Big Tech and enable cross-fertilisation between disciplines – and mindsets. After all, how you define the challenges created by Big Tech depends on your perception of them. Accordingly, teaching in computer science and engineering should also contain elements of ethics, law (such as data protection law), social sciences and humanities.

Of course, however public resources are divided up, they will always be dwarfed by those available to the Big Tech companies. For that reason, some people perceive the power relationship between university research/teaching and Big Tech innovation as akin to that between a person with a peashooter and Godzilla. But sometimes the question of who comes out on top is determined not only by the power conferred by technology and money. It is also important to consider who can influence the mindset of human beings – and this is precisely where universities have a big stake in the game.

Julia Hörnle is professor of internet law at the Centre for Commercial Law Studies, Queen Mary University of London.
