Universities should not bend to the demands of employers who want computer science graduates to know specific programming languages, and should instead focus on the fundamentals of the subject.

This was one of the arguments made at a conference last month that brought together academics and employers amid deep concern over high levels of unemployment among computer science graduates in the UK.

Next Steps for Computer Sciences in Higher Education, a conference organised by the Westminster Higher Education Forum, heard from Nigel Shadbolt, a professor at the University of Oxford’s department of computer science, who is currently writing a report on the future of the subject for the Department for Business, Innovation and Skills, due out imminently.

One reason for relatively poor employment rates could be that some universities have expanded too far and too fast. Sir Nigel showed delegates a graph which demonstrated a loose correlation between cohort size and unemployment – some universities, graduating 600 to 700 students a year, had a 25 per cent unemployment rate.

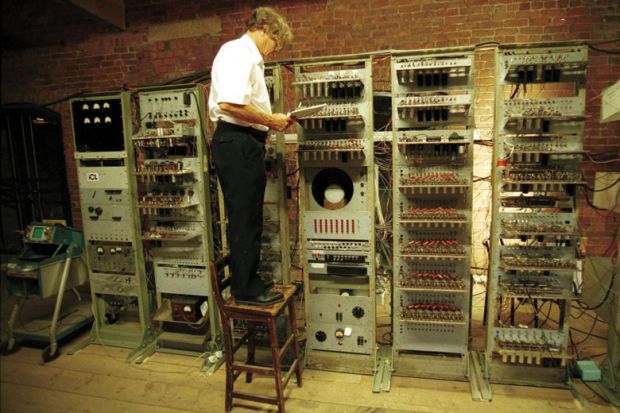

Delegates also heard from Steve Pettifer, reader in the School of Computer Science at the University of Manchester, who pointed out just how dramatically computing had changed since its birth in the mid-20th century.

The Manchester Small Scale Experimental Machine – nicknamed “The Baby”, despite taking up an entire room – was the first to store information electronically. Just under 70 years later, computers have shrunk to fit in our hands and transformed how we experience life. And yet, pointed out Dr Pettifer, recent computer science graduates will be working for almost as long again – until about 2066.

“Graduates are going to be working for almost the entire history of modern computing,” he said, making their future jobs “almost unimaginable”.

Universities are under pressure from businesses to teach graduates how to use the latest programming languages, Dr Pettifer explained. But this can be disastrously short-sighted. Around 2009-10, companies were demanding that graduates know how to use Symbian – then the dominant smartphone operating system.

But within a few years, Symbian had been wiped out by Google’s alternative, Android.

“If we focus on these kinds of bits of detail we’re going to be flailing around as higher education institutions, trying to figure out what the latest program is,” he said. More important than knowing specific programming languages were the “fundamental skills” and concepts of computer science, he argued.

He said that there were some signs that industry was being more “realistic” in its expectations. Ten years ago, employers would say “we’re not interested unless you know this, this and this”.

“Now they are asking for fundamental knowledge and will train them [graduates] in specific skills,” he added.

But Manchester was nonetheless keen to get students work experience before graduation, employing an academic as an “employability tutor” to “shepherd them through the process” of setting up placements, he said.

Teamwork skills are crucial in landing a job but are sometimes sorely lacking in the computer science world, said Hugh Cox, founder of the software company Rosslyn Analytics. Mr Cox said that he still had developers “who I can’t put in front of a client”, even after they had worked for his company for one to two years.

He added that graduates often “don’t know the first thing about how a business is run”, and suggested that universities teach computer scientists “an idiot’s guide to accounting”.

But teaching teamwork does not automatically create it. Sally Smith, dean of the School of Computing at Edinburgh Napier University, said that arguments during student group work had in the past become very heated – so much so that the police had to be called.

Accreditation misgivings

Many of those running computer science courses are mutinous at what they see as pointless, bureaucratic accreditation processes, judging from comments made at Next Steps for Computer Sciences in Higher Education.

About 80 per cent of computer science courses in the UK are accredited by the BCS (formerly the British Computer Society). The process is entirely voluntary.

Susan Eisenbach, head of the department of computing at Imperial College London, said that accreditation in general was a “huge administration overhead” taking two terms of work to simply produce the right documentation. But in the end, she doubted whether paperwork produced by Imperial was even read by accreditors.

“It was a complete waste of time for everybody,” Professor Eisenbach said.

She said that students never said they wanted their course accredited, nor had it ever come up with employers. The accreditation process was “not looking at content in any way at all”.

“The accreditors are of very variable quality,” she added.

She did throw accreditation one small bone: it had helped Imperial to incorporate transferable skills, such as group work and ethics, into the curriculum.

Bill Mitchell, director of the BCS’ academy of computing, was candid about the problems the system faced.

A workshop with employers held last year had exposed “a lot of confusion” over what accreditation was for, and Dr Mitchell acknowledged that “we need to look fundamentally at what the purpose of accreditation is and how we explain it to other people”.
