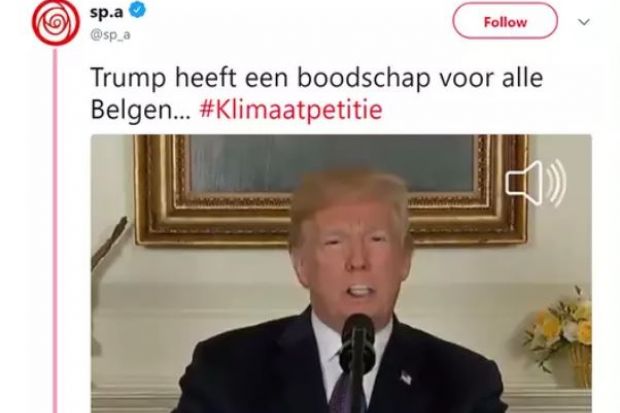

In May last year, a video surfaced online in which Donald Trump berated Belgium for not doing enough to tackle climate change.

“As you know I had the balls to withdraw from the Paris climate agreement. And so should you,” the US president appeared to say.

If this sounds slightly unbelievable, that’s because it was: the video was a fabrication by the Flemish Socialist Party. At the end of the video, “Trump” admits as much, although the clip reportedly still fooled some online commentators.

This was one of the early – and relatively crude – examples of a political “deepfake”, a new breed of sometimes spookily realistic videos that some fear could derail democracy itself.

“You can get the president of the United States to say whatever you want to say and make it look fairly realistic with relatively little time and effort,” warned Matthew Wright, director of the Center for Cybersecurity Research at the Rochester Institute of Technology and one of a number of academics racing to find detection systems for deepfakes before they proliferate across the web.

Manipulated videos and images – “shallowfakes” – have been around for decades. But deepfakes are made using so-called deep learning, a type of artificial intelligence that in some respects mimics the human brain. This allows “just about anybody who has basic fluency with computers” to automate a previously laborious manual effort requiring skills with a program such as Photoshop, explained Professor Wright. “They are getting better” all the time, he said.

Earlier this year, Professor Wright and his team won a $100,000 (£82,600) grant from the Ethics and Governance of AI Initiative, part of a wider package of funding to help newsrooms use machine learning to improve their coverage. His team hopes to create a website where journalists with “legitimate credentials” can use Rochester’s own checks on videos suspected of fakery.

Opening up such a website to the broader public, however, would allow deepfake creators to figure out how Professor Wright’s detection tool worked – and adjust their own methods to avoid being caught.

There is an “arms race” between academics’ detection systems and deepfake creators, said Edward Delp, a professor at Purdue University specialising in media security and one of the researchers involved in a project to create deepfake detection tools for the US Defense Advanced Research Projects Agency.

The risk is not just of fake videos of politicians. Such technology also allows the creation of “fake revenge porn” – where photos of a victim are used in fabricated pornography – something that has already been banned in Virginia. Meanwhile, Professor Wright has been contacted by the risk team of a large corporation; he assumes they are worried about deepfakes of their chief executive.

His aim is to create detection tools for others. But should academics directly intervene to test a video’s veracity? Earlier this year, Heinz-Christian Strache, who was then the vice-chancellor in the Austrian government, was caught by hidden cameras in an Ibiza holiday villa offering to trade public contracts for illegal donations and positive media coverage for his far-right Freedom Party. He subsequently resigned, triggering fresh elections set for September. Experts from Germany’s Fraunhofer Institute for Secure Information Technology helped journalists who obtained the video to verify that it was genuine.

For Professor Delp, though, this kind of direct testing, despite “many, many” requests, was off limits. “The downside is too high,” he said, as “you could get sucked into legal problems, or political debates – I’m really not interested in that.” Instead, he saw his role as developing underlying methods that can be deployed by others.

It is also unclear whether this kind of post hoc fact-checking will change anyone’s mind.

“We can label a video as being fake, but the question is whether people are going to believe that it’s fake. And I don’t know how to do that problem,” Professor Delp said.

The risk is that videos get out, go viral, and are viewed by millions before they are debunked – with the damage already done. “Our brains don’t work that way…you can’t unsee,” said Professor Wright.

This is why academics tackling deepfakes say they need the major online platforms to install automatic detection, flagging and blocking systems to stop deepfakes before they go viral. Professor Delp has talked to social media companies about this issue, but did not disclose which ones.

“We [academics] can make a difference, but we need the cooperation of the social media companies,” said Hany Farid, a professor at the University of California, Berkeley, specialising in image analysis. “To this end, we are working with Facebook under a research grant to operationalise some of our forensic work in detecting deepfakes and misinformation in general. We are also working with Google on similar efforts,” he said.

POSTSCRIPT:

Print headline: ‘Debunk fake videos before they go viral’
