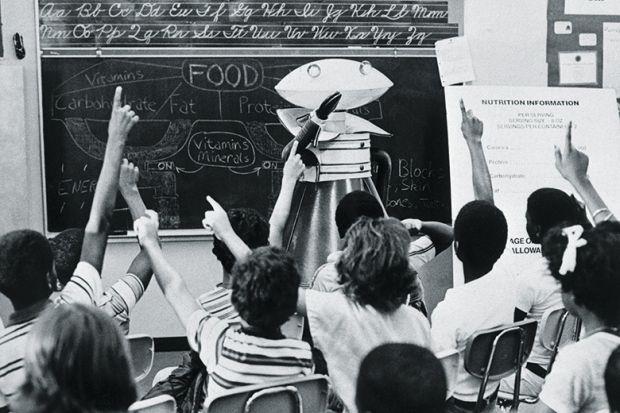

While some of my esteemed colleagues are heralding the imminent arrival of robot teachers, stating that current advances will bring “the greatest revolution in education since the printing press”, I have to disagree.

This is not because I am a latent technophobe. Regent’s University London, for example, is currently investigating the very latest assistive technologies, including how indoor navigation systems will support the visually impaired.

Developments in machine-assisted learning and support are highly valuable, welcome and exciting. However, the idea that machines and AI will eventually take over from humans fails to take into account the most important factor – how we learn as individuals.

Debate over the use of computers and AI in future education is not new, and many of us have worked in this field since the late 1960s. There is no doubt that AI will ultimately play a major role. The big question is, what will this role be?

William Norris had ambitious plans for the PLATO system, developed at Control Data Corporation between 1960 and 2006, which sought to change the roles of teachers.

Richard Atkinson and Patrick Suppes at Stanford University founded the Computer Curriculum Corporation in 1967, providing student-focused instruction, and The Open University pioneered large-scale computer-based learning with underlying AI in the mid-1970s.

All these systems failed to note the social aspects of learning and the ways in which learner isolation creates a breakdown of social norms and values.

From kindergarten through to postdoctoral level, the transfer of knowledge and skills is also about communication. This involves values, social skills, working in teams, experiencing different cultures and roles in society, and becoming good citizens.

Learning online is difficult. While it allows for the easy download of information and, using simple algorithms, offers useful rule-based learning in subjects such as accountancy and law, creative fields and some areas of management are much more challenging.

Education should help everyone, from administrators and tutors to students, become more sophisticated and better prepared to interact as humans. Online learning fails to perform in this sense.

The issue is finding a balance between “bricks and clicks”. The contrast between what you need to do in a room full of people and what you can do on your own highlights how people learn in different ways. Some need to go over the same ground repeatedly, while others apply knowledge to problem-solve and interact with their peers.

Technology can also monitor. I previously ran an enterprise college that used a sophisticated program to monitor students’ behaviour online in real time. This had the advantage of flagging individuals who were not doing enough, or who were experiencing problems and falling behind, allowing them to be supported. It also flagged tutors who were tempted to exaggerate the length of time that they worked online.

Was it a success? After the system had been online for weeks, it became apparent that students had barely contacted any lecturing staff, despite the use of friendly, tailored avatars designed to bring a reassuring personality to online interactions.

The system offered an option to switch to voice interaction with tutors to help work through any problems. Despite this, after weeks of observation, we found that students tended to avoid times when they could see that tutors were online.

Instead, they formed online classrooms, sharing presentations and discussions. It became clear that it was not helpful to have sessions run by tutors. We left the virtual rooms open, and the students started to take the space over for themselves.

The problem with segmented learning online is that most systems are based on a series of false assumptions.

PLATO assumed that teachers could learn a programming language called “Tutor”, but most were unable to do so. They really needed the support of an AI expert, a pedagogical expert, a designer, a psychologist…the list goes on.

Another mistake was the belief that all learners are the same. In fact, people need materials presented in different ways because of issues including gender, nationality, culture, age, and learning ability. This may impact the design of teaching illustrations, animations, typeface and language.

The biggest danger of believing that robots will one day dominate our education system is that of placing too much reliance on AI, without allowing for proper monitoring and support.

It is quite possible to build an expert AI teaching system featuring algorithms that monitor student progress and differences, sell it for an astronomical price and then, as learners sit isolated in their rooms, watch the student dropout rate rapidly multiply.

Just look at the notoriously high failure rates for students attempting to complete massive open online courses (Moocs).

The fictional Dr Susan Calvin, a character in Isaac Asimov’s Robot series of short stories, was the chief robopsychologist at US Robots and Mechanical Men Inc., the major manufacturer of robots in the 21st century. She neatly explored the Three Laws of Robotics: a robot may not injure a human or, through inaction, allow one to come to harm; a robot must obey orders given to it by humans except where such orders would conflict with the First Law; and a robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

It is sometimes overlooked that Asimov himself made slight modifications to these three laws in various stories, further analysing how robots might interact with humans and each other. In later fiction, after robots had taken responsibility for the governing of whole planets and human civilisations, he added a fourth, or zeroth, law to precede the others: a robot may not harm humanity or, by inaction, allow humanity to come to harm.

We need to be very careful how civilisation progresses, especially in the field of robot teaching, or this fourth law may be broken to the detriment of us all.

Aldwyn Cooper is the vice-chancellor of Regent’s University London.
