New Zealand has cancelled the upcoming round of its national research assessment, in what is widely regarded as a death blow for the decades-old exercise.

Tertiary education minister Penny Simmonds has decided not to proceed with data collection for the Performance-Based Research Fund (PBRF), which guides the allocation of NZ$315 million (£150 million) of block grants each year.

The decision, revealed by the Tertiary Education Commission (TEC), coincided with the announcement of a higher education review whose terms of reference include a “particular focus” on the PBRF.

Originally scheduled for this year, the quality evaluation had already been delayed until 2025 and then 2026 by the former Labour government.

Data collection for the first PBRF occurred in 2003, with subsequent rounds in 2006, 2012 and 2018. Asked whether the PBRF had “now served its purpose”, TEC chief executive Tim Fowler said it was an “open question”.

“We’re getting to the point where…very marginal gains are being made in comparison to what we saw for the first decade,” Mr Fowler told the Education and Workforce Select Committee in February.

“It has…provided us with really good, robust evidence of the quality of the research that has been delivered across our institutions. On the downside, it’s extremely compliance-heavy for us to run it. It’s a back-breaking six-year gestation period every round. The institutions themselves have to put in a lot of administrative effort to make it work.”

Universities New Zealand chief executive Chris Whelan said the cancellation was “sensible” in the context of the higher education review and a concurrent science appraisal.

“Both…will consider the role of the PBRF and, between them, are likely to suggest different ways of measuring and assessing effectiveness and impact,” Mr Whelan said.

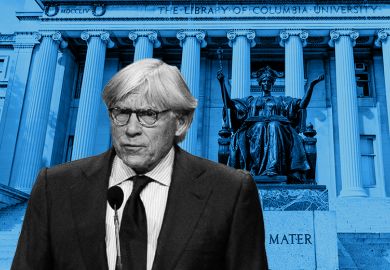

The advisory groups conducting the two reviews are both headed by former prime ministerial science advisor Peter Gluckman, who led a 2021 evaluation of the UK’s Research Excellence Framework (REF). His advice helped shape a massive shake-up of the REF, with individual academics no longer obliged to participate in the exercise.

In Australia, a review by Queensland University of Technology vice-chancellor Margaret Sheil led to the scrapping of that country’s national research exercise last August, a year after the government had postponed the exercise.

Higher education policy analyst Dave Guerin said he expected the same fate for the PBRF. “I suspect that the evaluation of academics’ portfolios will go,” said Mr Guerin, editor of the Tertiary Insight newsletter.

“It’s a huge compliance exercise and it provides little value these days. The early evaluations provided some value in changing behaviour, but the behaviour has changed, and we’re adding a huge amount of compliance costs on academics and research managers for little impact.”

Mr Guerin said the PBRF had been among several UK-influenced exercises introduced decades ago when the absence of research performance measures had aroused public scepticism. “A lot of people said: ‘What are all these academics doing?’

“But those problems have been addressed. The management systems of institutions have changed. There isn’t the mythical academic who’s just twiddling their thumbs. That stereotype has gone – instead you’ve got the mythical academic filling in lots of forms.”

John Egan, associate dean of learning and teaching at the University of Auckland, said that the workload “burden” generated by the PBRF impeded rather than enhanced research productivity.
