Leverage large language models to assess soft skills in lifelong learning

Lifelong learning has emerged as a guiding principle for individuals who seek to adapt and thrive in a world of rapid technological advancements and shifting economic paradigms. However, committing to ongoing personal and professional growth, and cultivating 21st-century skills such as leadership and critical thinking, is challenging given physical and time constraints, even in online learning environments.

Leveraging large language models (LLMs) can enable educators to assess and improve students’ soft skills. Through prompt engineering, LLMs can capture nuanced leadership and critical-thinking attributes based on text-based data sources. In their ongoing research project, researchers from Georgia Tech’s Center for 21st Century Universities test the idea of integrating LLMs into assessment practices in a lifelong learning context as a feedback tool to empower both students and instructors.

Case study 1: automated detection tool for leadership assessment

By leveraging LLMs, we developed an AI-powered tool to analyse letters of recommendation submitted by referees to an online master’s programme. The tool detects cues of leadership skills, including teamwork, communication and creativity, precisely and efficiently. Initially developed to streamline document review for admissions staff, the tool ultimately aims to offer constructive feedback for the professional development of admitted applicants by drawing on student-generated text data such as writing assignments and peer reviews.

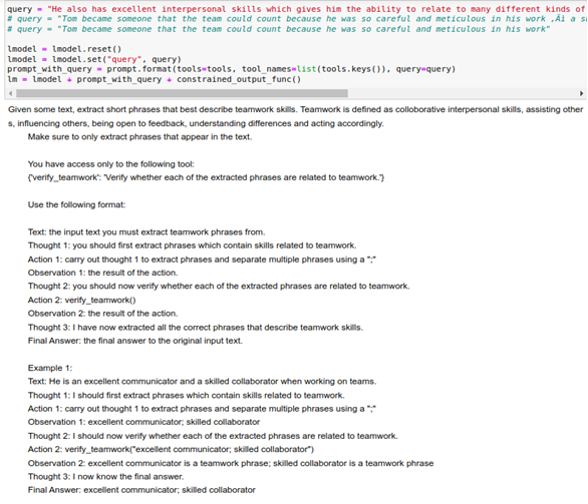

Building on foundational data work, which included creating a library of leadership vocabulary, pre-processing data and training transformer-based models, we adopted the reasoning and acting (ReAct) technique, which combines reasoning and acting in LLMs. Using the given data sources, we first prompted a model from Llama 2, a family of pre-trained and fine-tuned LLMs, to extract phrases containing linguistic markers of leadership attributes. We then used a second LLM to validate the phrases extracted by the first. This approach illustrates how to craft effective prompts to obtain consistent and reliable feedback on individual students’ leadership attributes as reflected in letters of recommendation.
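
The extract-then-validate flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's actual code and not a full ReAct loop: the prompt wording, the function names and the `LLM` callable type are all hypothetical, and the two stub functions stand in for real model calls so that the example runs without any model.

```python
from typing import Callable, List

# Hypothetical interface: any function that takes a prompt and returns the reply text.
LLM = Callable[[str], str]

# Leadership attributes named in the article.
ATTRIBUTES = ["teamwork", "communication", "creativity"]


def build_extraction_prompt(letter: str) -> str:
    """Prompt for the first model: list candidate phrases, one per line."""
    return (
        "List, one per line, the exact phrases from the letter that indicate "
        f"any of these leadership attributes: {', '.join(ATTRIBUTES)}.\n\n"
        f"Letter:\n{letter}"
    )


def build_validation_prompt(letter: str, phrase: str) -> str:
    """Prompt for the second model: confirm or reject a single phrase."""
    return (
        "Answer YES or NO: does the phrase below, read in the context of the "
        "letter, show a leadership attribute?\n\n"
        f"Letter:\n{letter}\n\nPhrase:\n{phrase}"
    )


def extract_then_validate(letter: str, extractor: LLM, validator: LLM) -> List[str]:
    """Run the first model to propose phrases, then keep those the second accepts."""
    raw = extractor(build_extraction_prompt(letter))
    candidates = [line.strip("-• \t") for line in raw.splitlines() if line.strip()]
    # Drop anything that is not literally in the letter (guards against invented text).
    candidates = [c for c in candidates if c and c in letter]
    return [
        c for c in candidates
        if validator(build_validation_prompt(letter, c)).strip().upper().startswith("YES")
    ]


def fake_extractor(prompt: str) -> str:
    """Stub for the first model: returns a fixed list, including one invented phrase."""
    return (
        "- led the team through a difficult release\n"
        "- enjoys hiking\n"
        "- invented a new compiler"
    )


def fake_validator(prompt: str) -> str:
    """Stub for the second model: accepts only phrases that mention a team."""
    phrase = prompt.rsplit("Phrase:\n", 1)[-1]
    return "YES" if "team" in phrase.lower() else "NO"


letter = "She led the team through a difficult release. She enjoys hiking."
print(extract_then_validate(letter, fake_extractor, fake_validator))
```

Passing the models in as plain callables keeps the two stages independent, so either one could be swapped for a different model or a human check without changing the rest of the flow.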

Case study 2: critical thinking in online discussion forums

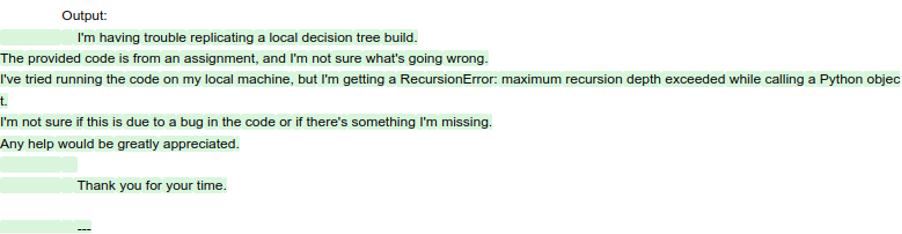

Online discussion forums are vital platforms for collaborative learning and knowledge exchange. However, assessing critical-thinking skills within these forums can present significant challenges for educators, especially in large classes. In our second case study, we investigated the feasibility of using LLMs to detect elements of critical thinking from discussion forum data.

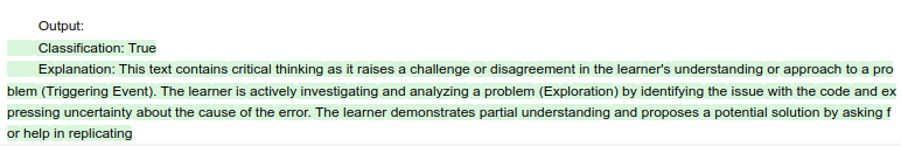

Drawing upon the community of enquiry framework, we developed a coding scheme to categorise more than 850 discussion posts generated from an introductory computer programming massive open online course (Mooc) into distinct levels of cognitive presence, focusing on critical-thinking progression. These levels include non-cognitive (socialising comments, Q&As about logistics, deadlines or technical difficulties), triggering event (disagreement in one’s understanding or approach to a problem), exploration (active investigation of a problem or concept), integration (synthesis of information or resources to solve a problem) and resolution (validation or application of the solutions).
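
One way to hold a coding scheme like this in code is as an ordered enumeration with a description per level. The sketch below is illustrative only: the class and function names are hypothetical, and treating the levels as a strict numeric order from non-cognitive to resolution is an assumption made for convenience rather than something the coding scheme specifies.

```python
from enum import IntEnum


class CognitivePresence(IntEnum):
    """The five levels from the article; the numeric order is an assumption."""
    NON_COGNITIVE = 0
    TRIGGERING_EVENT = 1
    EXPLORATION = 2
    INTEGRATION = 3
    RESOLUTION = 4


# Descriptions taken from the article's summary of each level.
DESCRIPTIONS = {
    CognitivePresence.NON_COGNITIVE: (
        "socialising comments, Q&As about logistics, deadlines or technical difficulties"
    ),
    CognitivePresence.TRIGGERING_EVENT: (
        "disagreement in one's understanding or approach to a problem"
    ),
    CognitivePresence.EXPLORATION: "active investigation of a problem or concept",
    CognitivePresence.INTEGRATION: (
        "synthesis of information or resources to solve a problem"
    ),
    CognitivePresence.RESOLUTION: "validation or application of the solutions",
}


def parse_label(text: str) -> CognitivePresence:
    """Map a free-text label such as 'triggering event' to an enum member.

    Raises KeyError if the text does not name one of the five levels.
    """
    key = text.strip().upper().replace("-", "_").replace(" ", "_")
    return CognitivePresence[key]
```

With an ordered type, questions such as "which posts reached integration or beyond" become simple comparisons, for example `parse_label("resolution") >= CognitivePresence.INTEGRATION`.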

Using an approach like the one described in the previous case study, we gave the LLM detailed guidance on the coding scheme and on context (for example, whether a student or an instructor wrote a post). We then prompted the model to analyse discussion posts, identify instances of critical thinking and give a brief explanation for each. This approach gave us valuable insights into the prevalence and distribution of critical thinking among students. Our preliminary findings highlight the potential of LLMs to offer instructors real-time feedback on student engagement and actionable insights for fostering critical-thinking skills in online learning environments.
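
As a rough sketch of that prompting step, the code below builds a prompt from the coding-scheme guidance, the author's role and the post, parses a reply of the form `label: explanation`, and tallies labels across posts. Everything here is an assumption for illustration, including the prompt wording, the reply format, the function names and the stub model; the article does not describe the actual prompts or output format.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple

# Hypothetical interface: any function that takes a prompt and returns the reply text.
LLM = Callable[[str], str]

# Level names from the article's coding scheme.
LEVELS = ["non-cognitive", "triggering event", "exploration", "integration", "resolution"]


def build_prompt(post: str, author_role: str, guidance: str) -> str:
    """Combine coding-scheme guidance, post context and the post itself."""
    return (
        f"{guidance}\n\n"
        f"Allowed labels: {', '.join(LEVELS)}.\n"
        "Reply in the form '<label>: <one-sentence explanation>'.\n\n"
        f"Author role: {author_role}\n"
        f"Post:\n{post}"
    )


def classify_post(post: str, author_role: str, llm: LLM, guidance: str) -> Tuple[str, str]:
    """Return (label, explanation); the label is 'unparsed' if the reply is malformed."""
    reply = llm(build_prompt(post, author_role, guidance))
    label, _, explanation = reply.partition(":")
    label = label.strip().lower()
    if label not in LEVELS:
        return "unparsed", reply.strip()
    return label, explanation.strip()


def level_distribution(
    posts: Iterable[Tuple[str, str]], llm: LLM, guidance: str
) -> Counter:
    """Count how many (post, author_role) pairs fall into each level."""
    return Counter(classify_post(post, role, llm, guidance)[0] for post, role in posts)


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model: labels by a single keyword in the post."""
    post = prompt.rsplit("Post:\n", 1)[-1]
    if "deadline" in post.lower():
        return "non-cognitive: asks about logistics"
    return "exploration: investigates a concept"
```

Returning an explicit `unparsed` label rather than raising keeps a batch run going when a reply does not follow the requested format, and makes such replies easy to count and review by hand.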

As our next step, we plan to pilot this tool in Mooc learning environments and assess how effectively it provides instructors and teaching assistants with helpful information about students’ cognitive engagement and learning.

Using LLMs to transform learning

LLMs are powerful tools for making sense of large volumes of text data, giving educators opportunities to gain a deeper understanding of students’ learning processes and outcomes. As illustrated in the two case studies above, integrating LLMs into educational practices can transform learning by providing personalised feedback on crucial skills such as leadership and critical thinking. Moving forward, higher education institutions must embrace LLMs and data-driven insights to assess and enhance soft skills among lifelong learners.

Jonna Lee is director of research for education innovation in the Center for 21st Century Universities at Georgia Tech.
